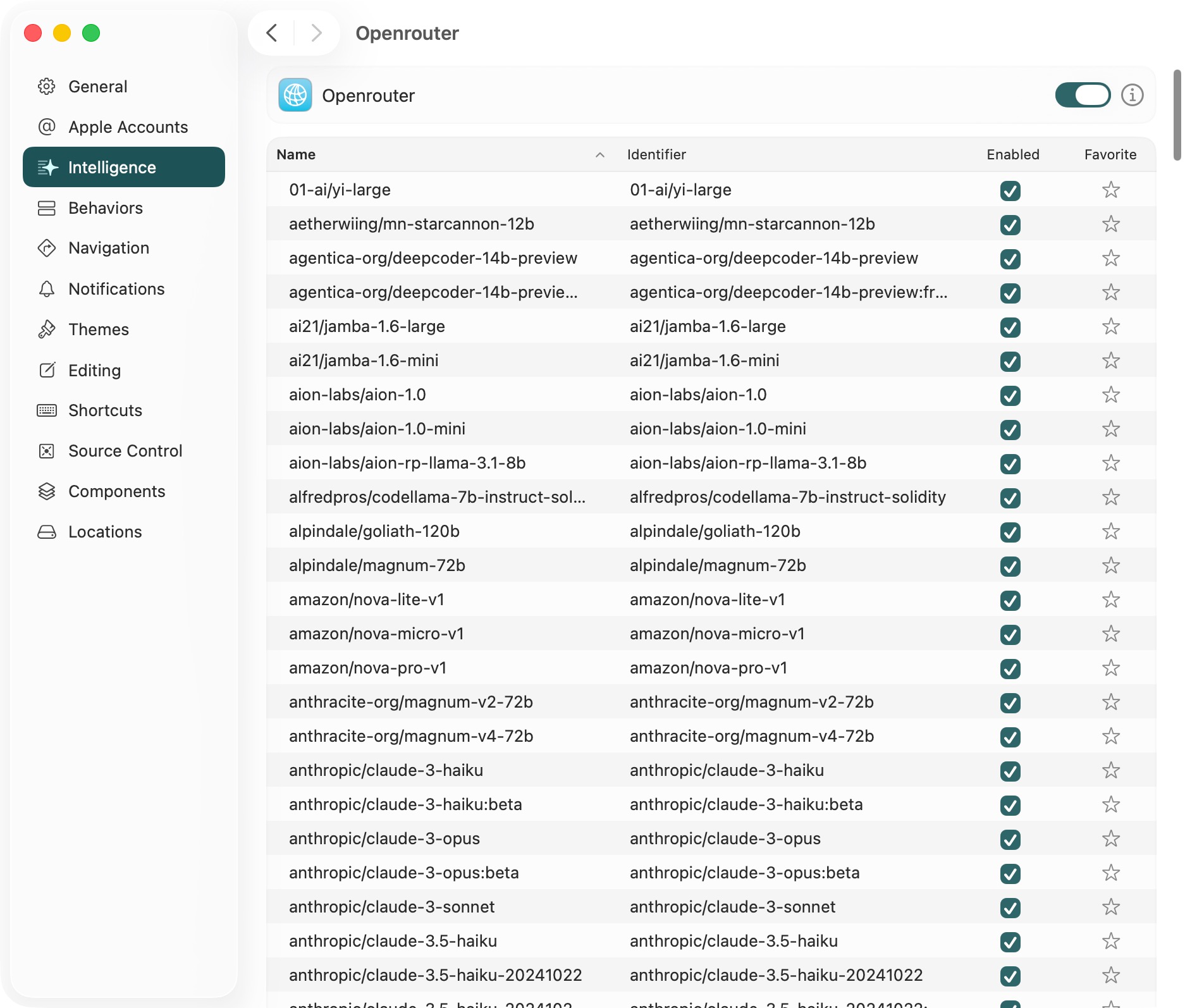

Edit: Xcode 26 beta 4 fixed the OpenRouter integration.

Xcode 26 finally brings something many of us have been waiting for: native support for large language model (LLM) assistants. It arrived a bit late, but better late than never. What’s more surprising is Apple’s rare openness in this area. Besides the built-in OpenAI integration, Xcode 26 now lets developers connect their own models, including third-party APIs and even local setups like Ollama.

That said, as of beta 3, the custom model feature still feels half-baked. Many popular providers don’t work properly, and the integration UI is hidden and unintuitive. Compared to something like OpenCat (my go-to LLM client), where settings like endpoints and headers are clearly laid out, the Xcode experience is much more opaque.

So I did a bit of digging—and here’s how I got custom models working in Xcode 26.

Limitations (as of beta 3)

So far, only OpenAI and Claude (via official APIs) work out of the box. Another provider I frequently use, OpenRouter, can connect but fails to load the model list. This could be due to the number of available models or an incompatible response format. Google Gemini, on the other hand, is completely unsupported at the moment—their API doesn’t match Xcode 26’s internal expectations.
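To make "incompatible response format" a bit more concrete, here is a minimal sketch of the kind of check a client might run on a model-list response. It assumes the client wants an OpenAI-style list (a top-level `data` array whose items carry an `id`). That is a guess based on the `/v1/models` path, not something confirmed about Xcode's internals. The function name and both sample payloads are hypothetical and only illustrate the two shapes.

```python
import json


def extract_model_ids(body: str) -> list[str]:
    """Return model ids from an OpenAI-style list, or raise ValueError."""
    payload = json.loads(body)
    data = payload.get("data") if isinstance(payload, dict) else None
    if not isinstance(data, list):
        raise ValueError("no top-level 'data' array")
    # Keep only entries that look like model objects with an id.
    return [m["id"] for m in data if isinstance(m, dict) and "id" in m]


# Illustrative payloads, not captured traffic.
openai_style = '{"object": "list", "data": [{"id": "model-a", "object": "model"}]}'
other_style = '{"models": [{"name": "models/model-b"}]}'

print(extract_model_ids(openai_style))
try:
    extract_model_ids(other_style)
except ValueError as err:
    print("rejected:", err)
```

A provider whose list uses a different top-level key would be rejected by a parser like this even though the request itself succeeded.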

We’ll take a closer look at what those “expectations” are in the next section.

The Internal Format

Xcode 26 only gives you a few fields to work with when setting up a custom model:

• endpoint
• key
• header
• description

Among them, the most important—but also most confusing—are endpoint and header.

endpoint

After inspecting traffic with Proxyman, I found that Xcode appends /v1/models? to whatever endpoint you enter. It uses that URL to fetch the list of available models.

So for example, if you enter this for OpenRouter:

https://openrouter.ai/api/v1/chat/completions

Xcode will actually request:

https://openrouter.ai/api/v1/chat/completions/v1/models

…which obviously fails with a 404.

The fix? Just remove the /v1/… part when entering your endpoint. For OpenRouter, use this instead:

https://openrouter.ai/api

That way, the final request becomes:

https://openrouter.ai/api/v1/models

—which just works.
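The URL arithmetic above can be sketched in a few lines of Python. This only mimics the behavior seen in the traffic capture (endpoint plus /v1/models). The helper names `models_url` and `trim_endpoint` are made up for illustration, and the trimming rule (cut at the first `v1` path segment) is an assumption that fits OpenAI-style URLs.

```python
from urllib.parse import urlsplit, urlunsplit

# Suffix Xcode was observed appending to the entered endpoint.
MODELS_SUFFIX = "/v1/models"


def models_url(endpoint: str) -> str:
    """Mimic the observed behavior: append /v1/models to the endpoint."""
    return endpoint.rstrip("/") + MODELS_SUFFIX


def trim_endpoint(url: str) -> str:
    """Drop everything from the first 'v1' path segment onward."""
    parts = urlsplit(url)
    segments = parts.path.split("/")
    if "v1" in segments:
        segments = segments[: segments.index("v1")]
    path = "/".join(segments).rstrip("/")
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


pasted = "https://openrouter.ai/api/v1/chat/completions"
print(models_url(pasted))                 # the doubled path that returns 404
print(trim_endpoint(pasted))              # what to type into the endpoint field
print(models_url(trim_endpoint(pasted)))  # the model-list URL that works
```

Running the pasted chat-completions URL through `trim_endpoint` first gives the value to enter in Xcode's endpoint field.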

header

By default, Xcode uses x-api-key as the authentication header. But many providers require a more standard header like:

Authorization: Bearer your-token

So in the header field, just enter Authorization. It’s a small but crucial tweak that makes a big difference—and honestly, a bit of a gotcha.
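If you want to sanity-check a provider outside Xcode before fiddling with the settings, a small script can build the same model-list request with either header style. This is a sketch under assumptions: `build_models_request` is a hypothetical helper, and the rule "add a Bearer prefix when the header is Authorization" reflects what providers like OpenRouter expect, not a confirmed description of what Xcode sends.

```python
import urllib.request


def build_models_request(endpoint, api_key, header_name="x-api-key"):
    """Build (but do not send) a GET request for the model list."""
    # Assumption: Authorization-style providers expect "Bearer <key>",
    # while x-api-key-style providers expect the raw key.
    if header_name.lower() == "authorization":
        value = f"Bearer {api_key}"
    else:
        value = api_key
    url = endpoint.rstrip("/") + "/v1/models"
    return urllib.request.Request(url, headers={header_name: value}, method="GET")


req = build_models_request("https://openrouter.ai/api", "your-token", "Authorization")
print(req.full_url)
print(req.get_header("Authorization"))
```

Passing the request to `urllib.request.urlopen` with a real key would show whether the provider accepts that header and returns a model list.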

Final Thoughts

At this stage (beta 3), Xcode 26’s custom model support is far from production-ready. It’s exciting to see Apple move in this direction, but the current implementation has lots of limitations and sharp edges.

Hopefully future updates will smooth things out and make it easier to integrate third-party or self-hosted models.